Running Large Language Models (LLMs) locally has increasingly become a sought-after skill set in the realms of artificial intelligence (AI), data science, and machine learning.

Whether for privacy reasons, specific research tasks, or to simply level up your coding capabilities, understanding how to operate models like LLAMA3, Phi3, Gemma, and others on your local machine opens up a world of possibilities.

By harnessing tools such as Docker, OLLAMA, and open Web UIs, enthusiasts and professionals alike can run LLMs, including small language models like Mistral 7b or Code Llama, as well as larger frameworks, directly from the comfort of their own computing environments.

This guide aims to demystify the process, covering key concepts from setting up your local model, utilizing APIs for seamless integration, to optimizing your setup with NVIDIA GPU for enhanced performance.

We’ll explore the vector databases crucial for managing the generative AI’s extensive data needs, alongside open-source tools that align with AI regulation standards. Diving into different models, from the expansive knowledge parameters of large language models to the nimbleness of small language models, we’ll equip you with the know-how to run LLMs efficiently.

Whether you’re interested in the latest in natural language processing, AI chatbots, text generation, or are eyeing the capabilities of OpenAI models, Google Bard, or Meta AI’s offerings, this guide will serve as your comprehensive walkthrough, peppered with recommended readings to deepen your understanding.

With an eye on practicality, we’ll address the handling of sensitive data and the role of consumer-grade hardware in achieving your goals, ensuring you’re well-prepared for the task ahead.

Checkout our Free AI Tool;

- Free AI Image Generator

- Free AI Text Generator

- Free AI Chat Bot

- 10,000+ ChatGPT, Cluade, Meta AI, Gemini Prompts

How to Run LLM Locally On Windows, iOS, Linux

Pre-requisites FOR Windows

Before we dive into the world of Large Language Models (LLMs) on Windows, there’s a little setup required to make everything run smoothly. First, you’ll need to install Windows Subsystem for Linux (WSL). This can easily be done by opening your command prompt and typing:

WSL –install

This simple command sets the stage for running Linux-based applications directly on Windows, a vital step for LLM enthusiasts.

Install Ollama

Now, to get Ollama up and running on your machine, follow these steps:

- Head over to the Ollama download page at Ollama Download.

- Choose your Operating System, which in this case would be Windows (with WSL), and hit the download button.

- Follow the on-screen instructions to install Ollama on your PC. This step is crucial for managing LLMs efficiently.

Install Docker

Next up, Docker! This tool is essential for containerizing applications, making the deployment of LLMs a breeze.

- Visit the Docker Desktop page at Docker Desktop Download.

- Select the version compatible with your OS (Mac, Linux, Windows) and initiate the download.

- Proceed with the installation by following the provided on-screen instructions. This step ensures you have the right environment to run containers seamlessly.

Run Open Web UI

What is Open Web UI?

Open WebUI is a self-hosted, extensible WebUI that works entirely offline, designed for running various LLM runners, including Ollama and those compatible with OpenAI APIs. More about it can be found on their official documentation at Open WebUI Docs.

Install Open Web UI

To install Open Web UI on your system, just follow these steps:

- Open a terminal on macOS or CMD on Windows.

- Execute the following Docker command:

docker run -d -p 3000:8080 –add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data –name open-webui –restart always ghcr.io/open-webui/open-webui:main

Make sure Ollama and Docker are running before you execute this command. This process installs a Web UI interface on your system, akin to ChatGPT, but locally hosted.

- Once the installation is complete, you can access Web UI at `http://localhost:3000/`.

- Create a free account to start exploring the functionalities.

Install LLMs

To bring LLMs into your local environment:

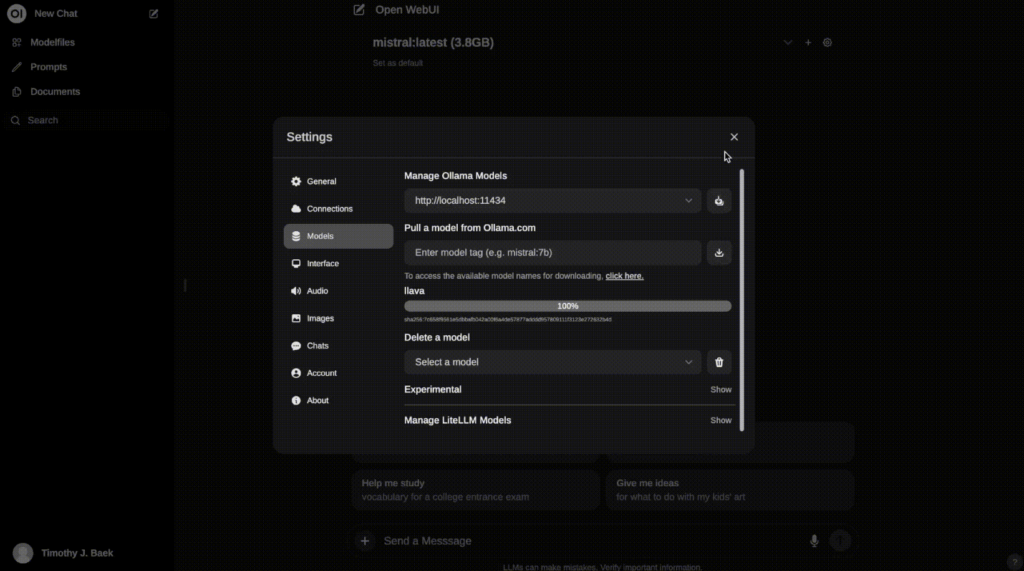

- Navigate to the settings icon in the Open Web UI.

- Go to models and input the name of the LLM you desire from the Ollama library.

- For demonstration, type “LLAMA3,” and the system will begin downloading it. This process equips your local setup with the power to run LLMs.

Run LLMs

Finally, to engage with your local LLM:

- Click on the new chat button within the Open Web UI.

- Select the model you installed, for example, LLAMA3, and submit your query.

- Voila! You’re now interacting with an AI model locally, no internet required.

This walkthrough has covered essential steps from setting up WSL, downloading and installing Ollama and Docker, to deploying and using the Open Web UI for running LLMs like LLAMA3. With your local machine now equipped with these tools and models, you’re set to explore the vast capabilities of LLMs in privacy-focused, internet-independent scenarios.

How to Run Local LLMs on iOS, Android Devices

Install ngrok

Running Local LLMs on iOS and Android devices starts with installing ngrok, a powerful tool that tunnels local servers onto the internet, making your local LLM accessible from your mobile device. Here’s how to get it set up:

- Follow the quickstart guide on ngrok’s documentation to install ngrok on your machine. This guide provides a comprehensive walkthrough for the installation process.

- Create a free account on ngrok to obtain your authtoken, which is crucial for authenticating your local ngrok client.

Run Open Web UI

Once ngrok is installed, you can proceed to make your local LLM accessible:

Link your ngrok account to your local setup by executing the command:

ngrok config add-authtoken <TOKEN>

Replace `<TOKEN>` with the actual authtoken you received when you signed up for your ngrok account.

With the Open Web UI running on your local machine, start ngrok by executing:

ngrok http http://localhost:3000

This command tunnels your local server running the Open Web UI onto the internet.

ngrok will provide you with an output that includes a forwarding URL, which looks something like this:

https://84c5df474.ngrok-free.dev -> http://localhost:8080

Copy this URL i.e. https://84c5df474.ngrok-free.dev

Now, simply paste this URL into the browser of your iOS or Android device, and voilà, you have access to your local Large Language Models (LLMs) right from your phone.

This setup turns your device into a powerful AI tool, allowing you to interact with the likes of LLAMA3, Mistral 7b, Code Llama, and other LLMs without the need for internet connectivity. By leveraging ngrok, you extend the functionalities of your LLM setup, opening up possibilities for on-the-go AI interactions, data science experimentation, and language model exploration.

Whether you’re deep into natural language processing, AI chatbots, or just fascinated by the potential of generative AI, having such immediate access transforms your mobile device into a potent tool for innovation and inquiry.

Frequently Asked Questions (FAQs)

Can I run any LLM on my local machine?

Yes, you can install and run any LLM compatible with OpenAI APIs, including the ones available in Ollama’s library.

Does running LLMs locally require an internet connection?

No, running LLMs locally does not require an internet connection. However, you do need an internet connection for initial set up and installation of tools like Ollama, Docker, and ngrok.

Can I deploy custom LLMs on my local setup?

Yes, you can deploy your custom LLMs on your local setup by following the same steps as installing any other LLM from Ollama’s library.

Conclusion

Running Large Language Models (LLMs) locally offers a unique and powerful way to engage with AI models. By setting up your local machine with tools like Ollama, Docker, Open Web UI, and ngrok, you can deploy and interact with LLMs without the need for internet connectivity.

Whether you’re a data scientist, AI enthusiast or just looking to have some fun exploring the capabilities of LLMs, give running them locally a try and see where your imagination takes you.

So go ahead, start tapping into the endless possibilities of local LLMs today!